Artificial Intelligence (AI) has matured far beyond its early role as a tool for classification, prediction, and process automation. In 2025, the field is witnessing the rise of Agentic AI – systems that do not merely react to prompts or predefined triggers but act as autonomous, context-aware collaborators within enterprise workflows.

Unlike conventional AI models that require structured input and yield bounded outputs, agentic systems are capable of reasoning over complex states, initiating actions without explicit human instruction, and adapting dynamically to evolving objectives. For data science professionals, this represents a paradigm shift as profound as the original transition from descriptive analytics to predictive modeling.

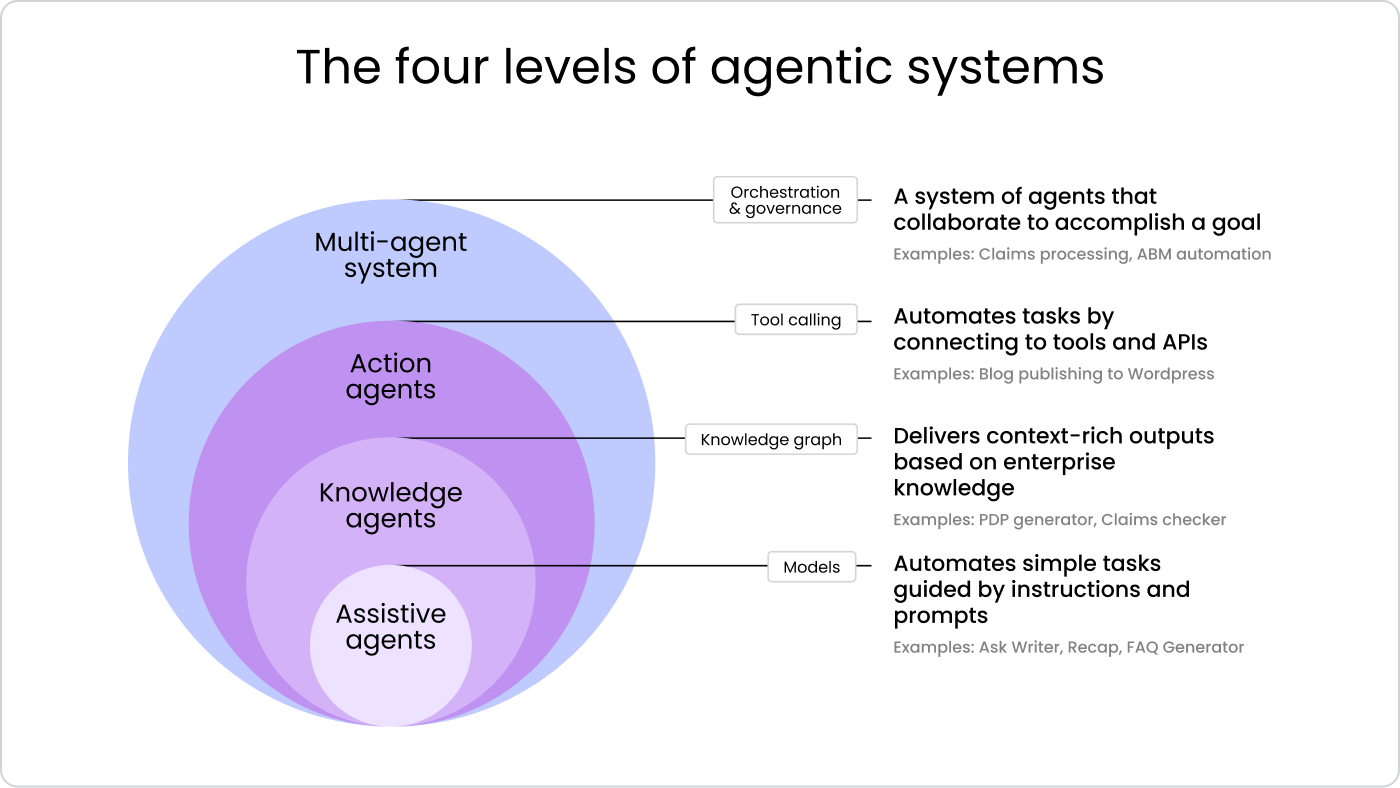

At its core, Agentic AI refers to AI agents endowed with autonomy, proactivity, and adaptability. These systems differ from traditional AI pipelines in several ways:

Autonomy: They can initiate tasks, make decisions, and reconfigure strategies without constant human prompting.

Context Awareness: They maintain an internal model of their environment, goals, and constraints, allowing them to interpret ambiguous instructions and align with broader objectives.

Iterative Reasoning: Unlike static inference pipelines, agentic AI employs recursive reasoning loops, planning, and self-evaluation.

Collaboration: These agents are designed to interface with humans and other agents, integrating seamlessly into multi-agent ecosystems.

In practical terms, this evolution shifts AI from a passive "ask and receive" service into an active digital collaborator capable of managing workflows end-to-end.
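The autonomy and iterative-reasoning traits above share a common control structure: propose an action, execute it, check the result, and repeat. The sketch below is a minimal illustration under stated assumptions, not a reference design. The `propose`, `act`, and `evaluate` callables are hypothetical stand-ins for an LLM planner, a tool call, and a self-check, and the toy goal of moving a counter to 7 exists only to make the loop runnable.

```python
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class LoopResult:
    achieved: bool
    steps: int
    history: list = field(default_factory=list)


def run_agent_loop(
    state: int,
    propose: Callable[[int], int],     # hypothetical planner: state -> action
    act: Callable[[int, int], int],    # hypothetical executor: (state, action) -> new state
    evaluate: Callable[[int], bool],   # hypothetical self-check: is the goal met?
    max_steps: int = 10,
) -> LoopResult:
    """Repeat propose -> act -> evaluate until the goal check passes or the step budget runs out."""
    history = []
    for step in range(1, max_steps + 1):
        action = propose(state)        # plan the next action from the current state
        state = act(state, action)     # execute it and observe the new state
        history.append((action, state))
        if evaluate(state):            # self-evaluation: stop once the goal is met
            return LoopResult(True, step, history)
    return LoopResult(False, max_steps, history)


# Toy task (hypothetical): move a counter from 0 to the target value 7.
TARGET = 7
result = run_agent_loop(
    state=0,
    propose=lambda s: 1 if s < TARGET else -1,
    act=lambda s, a: s + a,
    evaluate=lambda s: s == TARGET,
)
print(result.achieved, result.steps)
```

The `max_steps` budget is a deliberate design choice: an agent that decides for itself when to stop still needs a hard upper bound, and exhausting it returns `achieved=False` rather than looping forever.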

Historically, enterprise AI has been reactive. Machine learning models were trained to detect fraud, recommend products, or classify medical images based on provided inputs. Even reinforcement learning (RL) systems were designed to maximize predefined reward functions within constrained environments. These systems excelled at prediction but lacked the initiative to act beyond narrow task boundaries.

Agentic AI alters this dynamic by incorporating:

Planning and Goal-Oriented Behavior: Drawing on advances in hierarchical reinforcement learning, agentic systems can decompose complex tasks into subgoals and adjust their strategies dynamically as conditions change.

Tool Use and Orchestration: By connecting with APIs, databases, and enterprise applications, agents can select and sequence tools, essentially serving as workflow orchestrators.

Memory and Knowledge Integration: Through vector databases and long-term memory modules, agentic AI maintains context across sessions, enabling continuity and adaptive learning.

Meta-Cognition: Self-monitoring capabilities allow agents to detect errors, request clarification, or recalibrate their actions.

For data scientists, this means moving beyond model training and into the design of agentic architectures – compositions of reasoning modules, memory stores, orchestration frameworks, and human-in-the-loop controls.
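Tool orchestration and meta-cognition can be illustrated with a small registry of named tools and an executor that checks each step before moving on. This is a sketch under assumptions: the tools `fetch_orders` and `total_revenue`, their in-memory data, and the `$prev` convention for passing the previous result forward are all hypothetical, and in a real agent an LLM would generate the plan instead of it being written by hand.

```python
from typing import Any, Callable


# Hypothetical tools; in practice these would wrap APIs, databases, or enterprise applications.
def fetch_orders(region: str) -> list[dict]:
    data = {"emea": [{"id": 1, "amount": 120.0}, {"id": 2, "amount": 80.0}]}
    if region not in data:
        raise KeyError(f"unknown region: {region}")
    return data[region]


def total_revenue(orders: list[dict]) -> float:
    return sum(o["amount"] for o in orders)


TOOLS: dict[str, Callable[..., Any]] = {
    "fetch_orders": fetch_orders,
    "total_revenue": total_revenue,
}


def execute_plan(plan: list[tuple[str, dict]]) -> dict:
    """Run tool calls in sequence; an argument value of '$prev' receives the previous step's output."""
    prev: Any = None
    for i, (tool_name, args) in enumerate(plan):
        if tool_name not in TOOLS:
            # Meta-cognition in miniature: stop and ask instead of guessing.
            return {"status": "needs_clarification", "step": i, "reason": f"no tool named {tool_name!r}"}
        resolved = {k: (prev if v == "$prev" else v) for k, v in args.items()}
        try:
            prev = TOOLS[tool_name](**resolved)
        except Exception as exc:  # detect the failure and surface it rather than continuing
            return {"status": "error", "step": i, "reason": str(exc)}
    return {"status": "ok", "result": prev}


print(execute_plan([("fetch_orders", {"region": "emea"}), ("total_revenue", {"orders": "$prev"})]))
```

Returning a structured `needs_clarification` or `error` status, instead of raising or silently continuing, is what lets a surrounding loop (or a human) decide whether to re-plan.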

Building agentic AI requires integrating multiple technical components, each of which places new demands on contemporary data infrastructure:

Large Language Models (LLMs): At the core of many agentic systems, LLMs provide the linguistic and reasoning substrate, enabling natural interaction and flexible task interpretation.

Planning Modules: Techniques such as chain-of-thought reasoning, Monte Carlo tree search, and neuro-symbolic planning underpin agents' ability to strategize.

Memory Systems: Embedding stores (e.g., vector databases) capture historical context, while episodic and semantic memory architectures allow agents to maintain continuity.

Execution Layer: Agents require connectors to enterprise applications – CRM systems, cloud APIs, data warehouses – so they can enact changes in real-world systems.

Feedback Mechanisms: Reinforcement signals, user feedback, and self-evaluative heuristics guide iterative improvement.

The result is a layered architecture that blends statistical learning with symbolic reasoning and operational orchestration, an approach sometimes referred to as neuro-symbolic agent design.
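At its simplest, the memory layer stores an embedding for each record and retrieves the records whose embeddings are most similar to a query, typically by cosine similarity. The sketch below assumes a toy bag-of-words "embedding" so that it runs with the standard library alone; the `MemoryStore` class is hypothetical, and a production system would use a learned embedding model and a vector database in its place.

```python
import math
import re
from collections import Counter


def embed(text: str) -> Counter:
    """Toy embedding: lowercase word counts (a stand-in for a learned embedding model)."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


class MemoryStore:
    """Hypothetical long-term memory: append records, retrieve the top-k most similar ones."""

    def __init__(self) -> None:
        self._records: list[tuple[str, Counter]] = []

    def add(self, text: str) -> None:
        self._records.append((text, embed(text)))

    def search(self, query: str, k: int = 2) -> list[tuple[str, float]]:
        q = embed(query)
        scored = [(text, cosine(q, vec)) for text, vec in self._records]
        return sorted(scored, key=lambda item: item[1], reverse=True)[:k]


memory = MemoryStore()
memory.add("Customer 42 reported late delivery in March")
memory.add("Supplier contract renewal is due in June")
memory.add("Customer 42 asked for a refund after late delivery")
for text, score in memory.search("late delivery for customer 42"):
    print(f"{score:.2f}  {text}")
```

Because memory lives outside the model, records written in one session remain retrievable in the next, which is the continuity the memory component is meant to provide.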

The transition to agentic AI is not merely theoretical. Enterprises across verticals are experimenting with and deploying these systems:

Financial Services: Autonomous agents monitor market signals, adjust portfolio allocations, and hedge positions proactively, rather than waiting for analyst instructions.

Healthcare: Clinical decision agents integrate electronic health record (EHR) data, patient monitoring feeds, and medical literature to recommend interventions – escalating cases proactively rather than passively reporting metrics.

Supply Chain and Logistics: Agents dynamically re-route shipments, renegotiate supplier contracts, and adjust inventory strategies in response to geopolitical disruptions or weather forecasts.

Customer Experience: Virtual service agents no longer wait for inbound inquiries – they proactively detect churn signals and initiate outreach campaigns.

Cybersecurity: Instead of merely generating alerts, agentic AI identifies anomalous activity, executes containment procedures, and coordinates with human analysts for escalation.

For data science professionals, these use cases highlight the necessity of integrating real-time data pipelines, explainability modules, and robust monitoring frameworks.
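The proactive behavior in the customer-experience example amounts to a monitoring rule that triggers an action instead of waiting for a request. A minimal sketch, assuming weekly activity counts per customer: the rule (recent average below half of the earlier average), the `start_outreach` action name, and the sample data are illustrative assumptions, not a validated churn model.

```python
from statistics import mean


def churn_signals(activity: dict[str, list[int]], window: int = 3, drop: float = 0.5) -> list[str]:
    """Flag customers whose recent average activity fell below `drop` times their earlier average."""
    flagged = []
    for customer, weekly in activity.items():
        if len(weekly) <= window:
            continue                               # not enough history for a baseline
        baseline = mean(weekly[:-window])          # earlier weeks
        recent = mean(weekly[-window:])            # most recent `window` weeks
        if baseline > 0 and recent < drop * baseline:
            flagged.append(customer)
    return flagged


def proactive_step(activity: dict[str, list[int]]) -> list[dict[str, str]]:
    """Turn each churn signal into a (hypothetical) outreach action instead of waiting for an inbound request."""
    return [{"action": "start_outreach", "customer": c} for c in churn_signals(activity)]


sample = {
    "c1": [10, 12, 11, 3, 2, 2],   # sharp recent drop
    "c2": [5, 5, 6, 5, 6, 5],      # stable
    "c3": [4, 4],                  # too little history
}
print(proactive_step(sample))
```

In a deployed system this rule would run against a real-time pipeline rather than a static dictionary, which is why the pipeline and monitoring requirements above matter.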

Despite their promise, agentic AI systems present formidable challenges:

Alignment and Control: Autonomous behavior raises questions of goal misalignment. Agents optimizing for efficiency may take actions contrary to business priorities or ethical standards.

Explainability: Recursive reasoning and tool orchestration can create opaque decision paths, complicating compliance and auditability.

Security: The same APIs that empower agents to act also expose potential attack surfaces. Adversarial exploitation of agentic workflows poses novel risks.

Scalability: Maintaining context across millions of interactions requires significant computational and data infrastructure investments.

Governance: Defining clear human-in-the-loop boundaries is essential to prevent over-delegation of authority.

These challenges underscore the role of data scientists not merely as model builders, but as AI system architects and risk stewards.
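Human-in-the-loop boundaries become easier to audit when they are written down as an explicit policy gate: allowed low-risk actions run automatically, allowed high-risk actions wait for a human, and anything outside an allow-list is blocked. The action names, the upstream risk scores, and the 0.5 threshold below are hypothetical values chosen for illustration.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Callable


class Decision(Enum):
    EXECUTE = "execute"
    ESCALATE = "escalate"   # requires human approval
    BLOCK = "block"


@dataclass(frozen=True)
class Action:
    name: str
    risk: float   # 0.0 (harmless) to 1.0 (severe), assumed to be scored upstream


# Hypothetical policy: only these action types may be taken at all.
ALLOWED = {"send_report", "reroute_shipment", "isolate_host"}
APPROVAL_THRESHOLD = 0.5


def gate(action: Action) -> Decision:
    if action.name not in ALLOWED:
        return Decision.BLOCK
    if action.risk >= APPROVAL_THRESHOLD:
        return Decision.ESCALATE
    return Decision.EXECUTE


def run(action: Action, approve: Callable[[Action], bool]) -> str:
    """Apply the gate; `approve` is a callback standing in for a human reviewer."""
    decision = gate(action)
    if decision is Decision.EXECUTE:
        return f"executed {action.name}"
    if decision is Decision.ESCALATE and approve(action):
        return f"executed {action.name} (approved)"
    return f"withheld {action.name} ({decision.value})"


deny_all = lambda action: False
print(run(Action("send_report", 0.1), deny_all))
print(run(Action("isolate_host", 0.8), deny_all))
print(run(Action("delete_database", 0.2), deny_all))
```

Keeping the allow-list and threshold in one place gives auditors a single artifact to review, which addresses both the governance and the explainability concerns above.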

The rise of agentic AI redefines the professional toolkit:

Systems Thinking: Beyond feature engineering, practitioners must design feedback loops, orchestration layers, and governance structures.

Multi-Agent Coordination: Knowledge of distributed systems and game-theoretic dynamics becomes increasingly relevant as agents collaborate or compete.

Ethics and Policy Fluency: Data scientists must anticipate regulatory scrutiny and embed ethical principles into design patterns.

MLOps Evolution: Traditional MLOps practices expand into AgentOps – frameworks for monitoring, debugging, and continuously deploying autonomous agents.

Interdisciplinary Collaboration: Building agentic AI requires collaboration across machine learning, cognitive science, software engineering, and operations.

In effect, data scientists are transitioning into AI engineers of autonomy, tasked with shaping not only what models predict but how intelligent systems act.
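AgentOps starts with observability: every step an agent takes should leave a trace that can be inspected after the fact. One minimal way to get that in Python is a decorator that records each call's arguments, outcome, and latency. The `traced` decorator, the in-memory `TRACE` list, and the `lookup_inventory` tool are hypothetical; a production setup would export these records to a tracing backend.

```python
import functools
import json
import time
from typing import Any, Callable

TRACE: list[dict[str, Any]] = []   # in-memory trace; a real system would export these records


def traced(step_name: str) -> Callable:
    """Decorator that records each call's name, arguments, outcome, and latency in TRACE."""
    def decorator(fn: Callable) -> Callable:
        @functools.wraps(fn)
        def wrapper(*args, **kwargs):
            start = time.perf_counter()
            record = {"step": step_name, "args": repr(args), "kwargs": repr(kwargs)}
            try:
                result = fn(*args, **kwargs)
                record["status"] = "ok"
                return result
            except Exception as exc:
                record["status"] = f"error: {exc}"
                raise                       # re-raise so the agent loop still sees the failure
            finally:
                record["ms"] = round((time.perf_counter() - start) * 1000, 3)
                TRACE.append(record)
        return wrapper
    return decorator


@traced("lookup_inventory")
def lookup_inventory(sku: str) -> int:
    stock = {"A-100": 12}   # hypothetical data
    return stock[sku]


lookup_inventory("A-100")
try:
    lookup_inventory("Z-999")
except KeyError:
    pass
print(json.dumps(TRACE, indent=2))
```

Recording failures as well as successes, and re-raising the exception, means the trace supports debugging without changing how the agent itself behaves.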

Looking forward, several trends will shape the trajectory of agentic AI:

Integration with Multimodal Models: Agents will increasingly reason across text, image, video, and sensor data, enabling richer contextual understanding.

Standardization of Agent Frameworks: Emerging frameworks and projects (e.g., LangChain, AutoGPT, Microsoft AutoGen) will mature into enterprise-grade platforms with compliance features.

Rise of Multi-Agent Ecosystems: Complex workflows will involve swarms of agents collaborating and negotiating tasks, requiring new protocols for coordination.

Hybrid Human-Agent Teams: Enterprises will design workflows where agents proactively propose actions, but final authority remains with human decision-makers.

Policy and Regulation: Governments are already signaling tighter oversight, particularly in finance, healthcare, and defense, where autonomous action carries outsized consequences.
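Coordination protocols for multi-agent ecosystems can take many forms. One long-standing pattern is the contract net, in which a coordinator announces each task, capable agents submit bids, and the task goes to the lowest bidder. The agents, skills, and cost model (a base cost plus current load) below are invented for illustration, and unassigned tasks are left for a human, in line with the hybrid-team trend above.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class Agent:
    name: str
    skills: set[str]
    load: int = 0                                      # tasks already awarded to this agent
    assigned: list[str] = field(default_factory=list)

    def bid(self, skill: str) -> Optional[float]:
        """Return a cost for the task, or None if the agent lacks the required skill."""
        if skill not in self.skills:
            return None
        return 1.0 + self.load                         # assumed cost model: busier agents bid higher


def allocate(tasks: list[tuple[str, str]], agents: list[Agent]) -> dict[str, Optional[str]]:
    """Announce each task in order, collect bids, and award it to the lowest bidder."""
    awards: dict[str, Optional[str]] = {}
    for task_id, skill in tasks:
        bids = [(a.bid(skill), a) for a in agents]
        valid = [(cost, a) for cost, a in bids if cost is not None]
        if not valid:
            awards[task_id] = None                     # no capable agent: leave for a human
            continue
        _, winner = min(valid, key=lambda pair: pair[0])   # ties go to the earliest agent
        winner.load += 1
        winner.assigned.append(task_id)
        awards[task_id] = winner.name
    return awards


agents = [
    Agent("router", {"logistics"}),
    Agent("planner", {"logistics", "forecast"}),
    Agent("analyst", {"forecast"}),
]
tasks = [("t1", "logistics"), ("t2", "logistics"), ("t3", "forecast"), ("t4", "legal")]
awards = allocate(tasks, agents)
print(awards)
```

Load-based bidding spreads work across agents without a central scheduler knowing each agent's internals; richer protocols would add negotiation rounds or deadlines.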

Agentic AI represents a decisive step in the evolution of artificial intelligence. Where early systems required explicit prompts and rigid supervision, these new agents demonstrate initiative, adaptability, and context-awareness. For enterprises, the payoff is enormous: workflows that anticipate rather than react, digital collaborators that extend human capacity, and decision systems that dynamically align with shifting objectives.

For data science professionals, the challenge is equally significant. Building agentic AI demands fluency not only in algorithms and data pipelines but in system architecture, governance, and ethics. It requires rethinking the very role of AI in enterprise – from passive tool to active collaborator. As organizations move toward this frontier, the expertise of data scientists will be central in ensuring these systems are robust, aligned, and beneficial.

The age of Agentic AI has arrived, and with it, the responsibility to shape autonomous systems that serve human and organizational values while unlocking unprecedented levels of intelligence and productivity.

Article published by icrunchdata

Image credit: Getty Images, E+, MF3d
